Updates 2025/Q1

Life and project updates from the current consecutive three-month period. You might find this interesting if you’re using any of my open source tools or just want to read random things. :-)

This post includes personal updates and some open source project updates.

Personal things

¡Buenas! from Madrid, Spain, once again, where I have been for a while now. I could never have imagined Madrid with London or Berlin weather, but I guess that’s the reality we’re living in today. Most of the time the city has been colder than average, gray, and rainy, unfortunately. Only in the past few days has it gotten sunnier and warmer. Nevertheless, tourism is still thriving in Spain’s capital, with English slowly becoming what feels like the official language on the streets.

With the weather being the way it is, I got to spend more time than I anticipated indoors. Looking at it positively, though, I was able to advance some of my open source tools. If you want to skip forward to those, you can find all updates at the end of this page.

The past months have been truly great, and I have to admit that I would have loved to stay even longer in Asia and (re-)visit a few more places (e.g. Seoul and Bangkok). The only things I sort of missed were my desk and a few other belongings that I couldn’t take with me on my travels. And as much as I love my own coffee equipment, neither Japan nor Spain left me longing for it at any moment.

Speaking of coffee, there were two highlights that I’m especially grateful for. The first is a coffee that I discovered in Osaka when visiting Glitch Coffee, located on the ground floor of the 200m-tall Nakanoshima Festival Tower West. The coffee is from Colombia, specifically the La Loma farm, and it’s processed using a method that involves aging the coffee in actual whiskey barrels:

For this batch, only high-brix coffee cherries were selected. They were fermented in a tank with ten types of microorganisms based on wheat, sorghum, and corn, along with a small amount of whiskey. After fermenting, the mixture was rested in a cellar for 20 days before being aged in oak barrels previously used for whiskey production for 30 days.

(via Glitch Coffee)

After trying the first cup at Glitch, I was intrigued by the flavor and bought a 100g jar of these beans. However, I couldn’t stop thinking about the flavor, which is why I ultimately ended up visiting Glitch’s terribly overcrowded coffee shops in Tokyo to get as much of this coffee as possible. I ended up buying half a kilo of beans, which happened to be the last batch of that specific coffee Glitch would have. At over $120, it was probably my most expensive coffee bean purchase ever, yet I don’t regret a single penny. By now I have unfortunately finished the 100g jar that I bought back in Osaka, and even though I would love to rip through the 500g bag right now, I decided to save it for special occasions.

The second highlight was going back to what’s probably my absolute favorite coffee shop in the world: Bear Pond Espresso.

I visited the main location in Kitazawa, Setagaya-ku, as well as the On the Corner No. 8 location back in 2018 and fell in love with the Cappuccino. Unfortunately, the No. 8 location closed sometime in 2019. However, the main location is still around and just as great as I remembered it. Even though there are coffee beans that I find way more interesting – see above – the flavor and, more importantly, the consistency of Bear Pond are unbeaten. I went to Bear Pond nearly every day while in Tokyo and was served a perfect Cappuccino every single time. Obviously, I also took home half a kilo of their Flower Child roast, as well as some memorabilia that will hopefully keep me happy until the next time I get to visit Bear Pond Espresso. :-)

Site

My journal changed a little over the past months, as many regular readers might have noticed. Probably the biggest change is one that has been requested multiple times: the navigation.

In the past, readers found it unintuitive to have a large navigation at the top of every page. So much so that when one of my posts ended up in a forum somewhere, people sometimes commented that they were unable to see the actual post. It turned out that they were using small laptop displays and didn’t realize that they could scroll down past the navigation to see the post. This is when I had to add blinking text underneath the logo that said “scroll down”.

Anyway, as conversations about the navigation menu kept coming up, I eventually set some time aside to think about what a new navigation could look like and to implement it. You can see the result right now. To make the whole menu smaller, I had to remove all the individual post listings for Journal, Travel, Make, and Updates. Instead, I created individual navigation items for these collections. This allowed me to use all the horizontal space for the primary navigation. The downside, though, is that it’s no longer possible to see and directly navigate to the latest six posts in each collection. Instead, readers have to click on the navigation item for the specific collection (e.g. Journal) first, and can then see and navigate to the latest items within that collection. This adds one more click and page load to the flow. To make up for that, I made the site more lightweight and thereby faster – more on that later. To finish it up and keep everything compact, I moved the QR code for individual pages to the bottom of the page.

The navigation now spreads horizontally across multiple columns, depending on your browser width. On a desktop you should be seeing four columns, while on most mobile devices the items will split across two columns. There’s also an in-between three-column layout for tablets and the like.

Another change to the menu concerns the active page. The navigation will now highlight the currently active page if that page has a navigation item. This has been a bit of a hacky thing to do because by default Hugo isn’t able to tell whether a collection page is active. What I ended up doing is this:

```go-html-template
{{ $currentPage := . }}

...

{{ range $.Site.Menus.primary }}
<li class="{{if or ($currentPage.IsMenuCurrent "primary" .) (eq $currentPage.RelPermalink .URL) }} active{{end}}">
  <a href="{{ .URL }}" target="_self">{{ .Name | markdownify }}</a>
</li>
{{ end }}

...
```

The first condition works for regular pages that have a menu entry; the second compares the current page’s RelPermalink with the menu item’s URL, which works just fine for collections and the like. With this in place, when you click on e.g. Travel to navigate to the travel page, the navigation item remains highlighted for as long as you’re on that page.

Speaking of Travel, I have completely restructured the travel posts. Ideally you won’t notice any of it, as I’ve tried to preserve all URLs. However, the whole travel section is now a page rather than a mere category. It lists all countries, which are also individual pages, and within them each city. This way I am able to link to individual countries, e.g. Japan, each with a collection of the cities that I have published travel content about.

I still have to edit and upload older travel photography, especially from before I even had this website online, but that’s something I’ll do once I have implemented a proper photography workflow.

Another thing you have probably noticed, though, is that the images on this website look different now. This is because I completely refactored the image shortcode that I am using. I consolidated the different image types, changed the thumbnail image format from JPG to WebP, and increased the compression in an effort to further shrink the data transferred when browsing this site. Unfortunately, with this change, the website became less compatible with older browsers. If you happen to use a major browser in a version that is more than ten years old, you might not be able to see any photos anymore. However, given how riddled with security issues browsers of that age are, you are likely to have more severe problems than missing pictures on a day-to-day basis.

In addition to changing the image format and increasing the compression, I made use of Hugo’s relatively new dithering feature to hide the lower image quality behind a stylistic choice. Or in plain English: the lower-quality preview images look like potatoes, so I applied a low-tech-style filter to mask their potatoness. When you click an image to open the original, however, it is still the same heavily imager-crunched, yet (in many cases) still ginormous 4K JPG image that it had been before.

All in all, I was able to decrease data use by around 33% on average, with even higher savings on pages where the previous image shortcode implementation would mistakenly embed the full-size source image – ouch! On top of that, I limited pagination to six posts per page, further decreasing the data use of post lists.

The Small Web

“So you can finally join the ~~250KB~~ ~~512KB~~ 1MB club!”

Depending on which six posts are currently displayed on the homepage, I probably could, yes. However, this statement already shows the inherent issue with these clubs: while their mission is something many people (including myself) can get behind, their entry requirements, unfortunately, fail to support that mission.

Their mission statements include phrases like “The internet has become a bloated mess. Huge JavaScript libraries, countless client-side queries and overly complex frontend frameworks are par for the course these days.” and “The Web Is Doom”. Yet the sole reason this site is not eligible for e.g. the 250KB club is the photography displayed alongside the content, which, in my opinion, adds value to the written word. Even though some of the pages on this website might transfer several megabytes of (image) data, I would not classify the site as a bloated mess. Clearly, considering solely the size of any given page – especially the homepage – isn’t the best idea. This approach leads to clubs featuring websites that in many cases lack any useful content and are often only placeholders. Many of these websites say something like “Hi, this is me, check out my main website here”, with a link to a much larger website that by itself would never have made it onto any of those clubs’ lists.

While there happen to be a handful of gems within these clubs, I don’t think a limitation by size – or even by technology – is the right approach. My website uses some JavaScript to display a handy QR code ~~above~~ below every page, so you can take it with you by pointing your phone’s camera at it, and it uses JS for the privacy-respecting analytics tool that I use. Does it depend on JavaScript to function? Not at all! In fact, I invite you to browse this site with JS turned off, as everything apart from the QR codes will work for you. The nojs-club should rather be the novitaljs-club.

Anyhow, besides these clubs, I also don’t have the feeling that Marginalia and Kagi’s smallweb turned out the way most people anticipated. Both directories now include sites running on e.g. Blogger and WordPress.com, or embedding social media (Facebook, Instagram, ~~Twitter~~ X) snippets and the like. If we apply the core principles of what is usually referred to as the small web/indie web, we can see that these sites aren’t a good fit:

- They don’t own their data, at least not in its entirety.

- They’re not intentionally built for longevity, considering that they are at the mercy of businesses and platforms that might shut their doors at any moment.

- They’re not truly decentralized as they’re not independently run, or at least run in a way that would allow them to easily move operations to a different host/platform.

Although I don’t have a better idea of how to solve the issues the aforementioned webrings are trying to tackle, I nevertheless don’t feel like putting in the effort to join and maintain a <size>/no-<tech>-club membership, especially because I don’t believe it increases discoverability; instead, it serves primarily as a badge of honor. This site is already listed on Kagi’s smallweb as well as Marginalia, and even traffic originating from these sources makes up less than 1% of the total. I suspect that only a small share of the people who are interested in the small web, and who stumble upon this site, use either of those platforms to do so. However, this is purely speculation based on my own experience.

std.debug.print("Nu language, who dis?\n", .{});

In other news, I’ve been slowly but steadily diving into Zig, after having spent the past years working primarily with Go, alongside Python, Elixir, Ruby/Rails, a little bit of Rust, and some TypeScript with Svelte/SvelteKit when necessary. It is a pleasant change to be back in lower-level systems programming, and a lot of the finger exercises I did in Zig were reminiscent of the times when I worked a lot more with (embedded) C and C++.

I’m particularly excited about Zig’s future in the area of microcontroller development. While I have always enjoyed working with C, and especially RIOT for MCU development, I can see how Zig’s comptime execution, its safety checks (at compile time where possible, otherwise at runtime in safe build modes), its error handling, and the easier toolchain with cross-compilation baked right in can benefit the development flow.

On the downside, however, the Zig language and its ecosystem are still changing a lot at this stage. As a result, much of the information one finds online is outdated and no longer works with the latest release of Zig. One example of this that I have encountered is the MultiArrayList, which appears to have changed multiple times over the past years, so that many of the examples one might encounter online no longer work.

Another thing is the lack of proper interfaces, which is rather unfortunate. Even though the sort-of-interfaces that exist, such as std.mem.Allocator and std.io.AnyWriter, get the job done, I find the approach rather hostile to work with. It would be nice to have syntactic sugar on top of the @ptrCast/@alignCast/*anyopaque combination that is often used to implement interfaces. With tagged unions, on the other hand, all implementations have to be known ahead of time, which might not be feasible in many cases. Regardless of these minor annoyances, I’m going to continue dipping my toes into Zig.

Why no more Rust?

For a long time, I was looking to learn another low-level language, and while I tried getting into Rust on several occasions, I could never really make it stick. I think the Rust language offers a great set of features, and I also think that Cargo is the best package manager – and I’ve worked with NuGet, NPM, Hex, and plenty of others. Many of my favorite CLI tools are built in Rust, and I envy everyone who’s able to dig into the language and build amazing things with it. However, I came to realize that I simply don’t like Rust, and no matter how often I read and write it, I cannot get myself to truly enjoy it. I enjoy challenges, so Rust’s steep learning curve has never been a barrier for me. However, I could never make developing in Rust fun, as I felt artificially held back and slowed down by how Rust expects me to write code. In addition, I find Rust code very unpleasant to look at. Beauty is in the eye of the beholder, and to me, Rust is as ugly as C++ – if not worse.

Besides, the Rust developer communities appear to be heavily centered around Discord – which I find equally problematic from a privacy and a usability perspective – and around the idea of rewriting everything in Rust, which at times feels a bit aggressive. I also found it hard to identify local Rust communities around the globe, given that more than two-thirds of the people representing the Rust community appear to come from only ten countries. Moreover, Rust, and by extension its community, appears to be somewhat into politics, at least more than I’d personally prefer for a programming language, and most professional engagements involving Rust these days appear to be in Solana smart contract development, which I have little interest in.

Anyhow, I’m looking forward to trying out more things using Zig from now on and hopefully using it professionally at some point.

Keyboards

Starting with some news about one of my favorite keyboard shops – RAMA – it looks like the story continues the way everyone at this stage foresaw it: RWH Pty Ltd is liquidating. Following the initial ASIC notice, the appointed liquidator as well as RAMA published statements about the situation.

Even though the statement ends with the phrase “Until next time,”, the reputational damage is done and the RAMA brand is highly unlikely to be seen again – especially if the rumors are true and most of what happened originated from the founder mismanaging the company for his own profit and the extravagant lifestyle he supposedly led.

Many people with outstanding orders will likely be left holding the bag. While groupbuys never come with guarantees, RAMA appeared to be doing fine when people ordered the KATE CAPS keycaps and the next iterations of their keyboards. There were no indications whatsoever that the company might go bust. Even though I don’t have any outstanding orders with RAMA, this whole situation has been a good lesson for me to look at groupbuys with even more skepticism – particularly when the vendor is out on their own, trying to do things like creating a brand-new keycap profile.

However, with the team around RAMA having left a long time ago, the founder, Renan Ramadan, is now apparently following a new career path at Perplexity AI, a search engine that uses large language models (LLMs) to answer queries using sources from the web. Everyone understands that the show must go on; however, it is mildly infuriating that none of the topics at hand were addressed on the company’s website or any of its official social channels until RAMA was supposedly forced to by law. Bad things happen, and whether it was mismanagement or simply misfortune that led RAMA down this path, things could have been handled significantly more transparently, rather than leaving behind a community of salty RAMA customers.

As for the content of RAMA’s official statement, some people call it, quote, “Signs of a narcissistic egomaniac”, and, quote, “Outrageously out of touch”, while others summarize it more visually. I, for one, will withhold judgment on the whole RAMA DRAMA until the legal battles are over and all sides can clarify what, in their view, truly led to this downfall.

Regardless of that, I think it’s time to say R.I.P. RAMA WORKS. Everyone who owns and plans to hold on to their existing RAMA keyboards might find this comment from Wilba interesting and worth bookmarking. It would be great to still be able to purchase replacement PCBs for RAMA boards. I’m storing mine in a high-temperature, high-humidity, sea-salty environment, in which most electronics fail after only a few years, so having replacement PCBs available would definitely help their longevity.

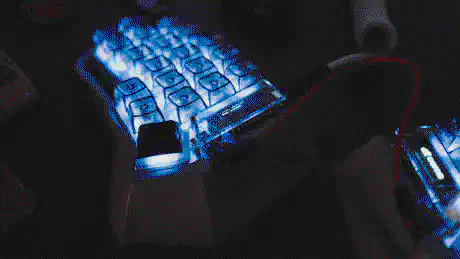

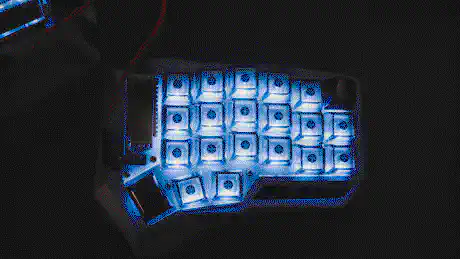

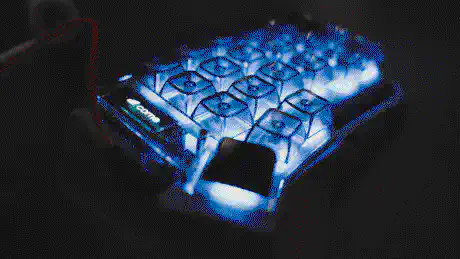

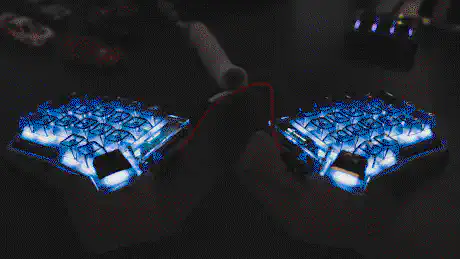

Binepad BNK9 Macropad

"Yet another macropad?", you might be wondering. Indeed, it seems it’s getting out of hand. However, as I’ve been eyeing the Binepad for a while now and Drop happened to have it on sale back in October 2024, I decided to give it a go.

Initially, Drop estimated the shipping date to be the 27th of December 2024, which was fine by me, as I wouldn’t be around anyway and would only get to pick up the package around Q1/Q2 of 2025. However, as I didn’t get any notification about the package from my forwarder up until the end of January, I decided to reach out to Drop’s support and ask for an update on the shipping date. Here is the full interaction with Drop (in reverse chronological order):

Amy V (Drop)

Jan 22, 2025, 12:33 PST

Hello,

We apologize for the wait. Our internal team is following up with the vendor and we will notify all customers of the new ship date as soon as possible.

—

Jordan (Drop)

Jan 20, 2025, 13:16 PST

Hello,

Thanks for contacting Drop Community Support! We apologize for the wait. I will check on this with our team and get back to you with an update as soon as possible.

If you have any other questions, please let me know.

Warm regards, Drop Support Team

—

Marius

Jan 19, 2025, 06:05 PST

Hey there Drop Crew,

Could you give me a heads up about the shipping of the BNK9?

Thank you!

One day later, I received another update from Drop:

Another quick update regarding your Binepad BNK9 Macropad.

We wanted to let you know there’s been a continued delay in the fulfillment of the group’s order and as of now the new estimated shipping date is February 7th.

We apologize for the delay and appreciate your patience while we work to get everything shipped as quickly as possible. As usual, you may review your order at any time by going to your transactions page here: https://drop.com/transactions

Please feel free to reach out if you have any questions.

Sincerely, The Drop Team

At this point, I began looking online for further info on this specific macropad and found out that there had been at least two different groupbuys for it, at the end of 2023 as well as in early 2024. Drop’s sale was not advertised as a groupbuy, hence I assumed that they would have the devices readily available at the initially communicated shipping date (27th of December 2024).

The lack of further information and reviews, together with the imprecise communication from Drop, eventually led me to cancel this order. There have been too many pre-orders and groupbuys in the keyboard space lately that went sideways.

Also, to be fair, I’m quite happy with my RAMA M6-C. Even though it lacks a rotary encoder, which would be useful for changing the volume of different audio inputs and outputs, that’s nothing I really need.

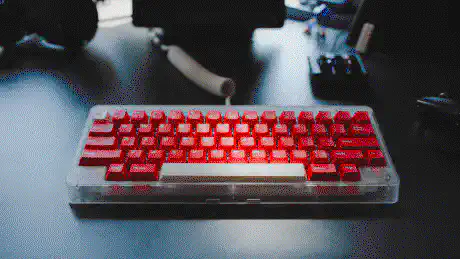

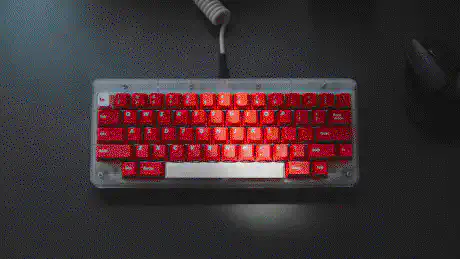

LeleLab Supsup Venetian Red

I stumbled upon the LeleLab Supsup one day when I was sitting in Brooklyn, slurping a coffee and browsing zFrontier on c4l1c0. The moment I saw the keycap set, I knew that it would look supreme on the RAMA (R.I.P.) KARA ICED.

I’m a fan of Barbara Kruger’s word art and the influence it had on modern culture – specifically on the Supreme brand, with its now iconic red box logo in white Futura Heavy Oblique. Even though Supreme has become a mainstream fashion douchebag brand owned by the behemoth ~~VF~~ EssilorLuxottica these days, as someone who was part of the skateboarding community of the 90s, I still feel a sense of nostalgia when I see the visuals.

The Supsup keycap set from LeleLab is clearly piggybacking – pun intended – on the famous aesthetics. Even though I found the Classic Red and the SuperX White keycaps more interesting, I decided to go with the Venetian Red variant, so as not to overdo it with translucency. On a MILK KARA, either of the former two sets would have been my preferred option, yet on my ICED keyboard the Venetian Red looks like the better fit.

Without further ado, I present to you: The RAMA (R.I.P.) KARA HyPeBeAsT Edition

As I’m primarily using the Kunai, this build was purely artistic and serves mainly as a decorative object. Some people use wooden birds as decoration, I use keyboards. However, in case LeleLab ever decides to make their keycaps in a different profile – say, KAT, KAM, MTNU, or PBS – I would totally rock them on the Corne.

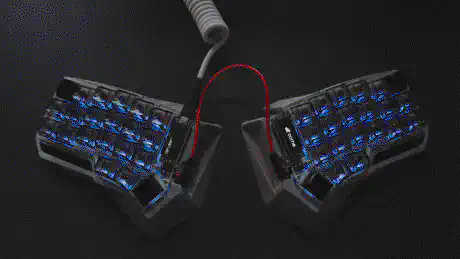

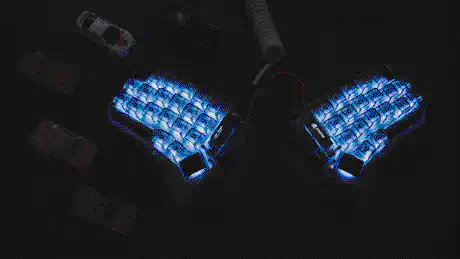

Elacgap XDA Transparent Black

I found another set of keycaps, this time on Amazon, that I wanted to give a try on the Kunai. I love the look and profile of the glossy black KAM Blanks R2, which I’ve had on the Corne ever since I built it. However, I felt like black transparent keycaps would give the keyboard more of the AM HATSU aesthetic that I was originally going for.

The 38 XDA keycaps that I needed to equip all but the thumb and the upper left and right corner keys cost me a little over $20 and look quite nice. As the XDA profile is fairly similar to KAM, the typing experience is pretty much the same. With the XDA keys having the same thickness as the KAM Blanks, the sound is also fairly similar – not that the Kunai with its Gazzew Boba U4 switches is particularly soundy to begin with.

To be completely honest, though, the keycaps are more for show than for actual use. Just like the KAM Blanks, they get smudgy in an instant, especially in hot and humid environments, making the keyboard gross to look at and type on. Hence, most of the time I rock a thick, textured PBT keycap set on the Kunai, like the biip MT3 Extended 2048, for example.

On a side note: there’s a lack of textured PBT KAM keycaps, and given how little interest keycap designers show in the KAM profile, I truly hope that PBS will eventually take its place. In fact, I’m looking forward to the first reviews of PBS Aperture Priority and might purchase a set as soon as it is in stock.

Infrastructure & hardware

ISP

Even during the brief period that I have been home, the previously reported connectivity issues with my ISP continued, making the broadband connection unusable – especially with any VPN. Luckily, the Starlink has had little to no issues at all.

h4nk4-m

The Ultra-Portable Datacenter has been up and running ever since I finished the device, and I haven’t had any issues with it so far. Every once in a while I do see undervoltage messages, even though I’m using a dedicated (unofficial) Raspberry Pi PSU that delivers 5.1V/5A, 9V/3A, 12V/3A, 15V/3A, and 20V/2.5A with a maximum of 45W, and comes with an integrated USB-C cable:

```text
hwmon hwmon5: Undervoltage detected!
hwmon hwmon5: Voltage normalised
hwmon hwmon5: Undervoltage detected!
hwmon hwmon5: Voltage normalised
hwmon hwmon5: Undervoltage detected!
hwmon hwmon5: Voltage normalised
```

I will give the Geekworm DC 5521 60W 12V 5A PSU a try at some point, as it seems to be the PSU officially recommended for the X1202, and I suspect that the additional hardware might be the cause of these undervoltage messages.
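To tell whether a different PSU actually makes a difference, it helps to count these events over a fixed period rather than eyeballing the log. A minimal sketch, assuming the kernel messages look exactly like the lines above (e.g. as captured from `dmesg` or `journalctl -k`); `countUndervoltage` is a hypothetical helper, and the sample log is embedded so the program runs on its own.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// sampleLog reproduces the kernel messages shown above.
const sampleLog = `hwmon hwmon5: Undervoltage detected!
hwmon hwmon5: Voltage normalised
hwmon hwmon5: Undervoltage detected!
hwmon hwmon5: Voltage normalised
hwmon hwmon5: Undervoltage detected!
hwmon hwmon5: Voltage normalised`

// countUndervoltage returns how many undervoltage events the log reports
// and how many of them were followed by a "Voltage normalised" message.
func countUndervoltage(log string) (events, recovered int) {
	pending := false // true while an event has not been followed by a recovery
	sc := bufio.NewScanner(strings.NewReader(log))
	for sc.Scan() {
		line := sc.Text()
		switch {
		case strings.Contains(line, "Undervoltage detected!"):
			events++
			pending = true
		case pending && strings.Contains(line, "Voltage normalised"):
			recovered++
			pending = false
		}
	}
	return events, recovered
}

func main() {
	events, recovered := countUndervoltage(sampleLog)
	fmt.Printf("%d undervoltage events, %d recovered\n", events, recovered)
}
```

In practice the input would come from the kernel log of a given day or boot; reading it from stdin is left out to keep the sketch self-contained.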

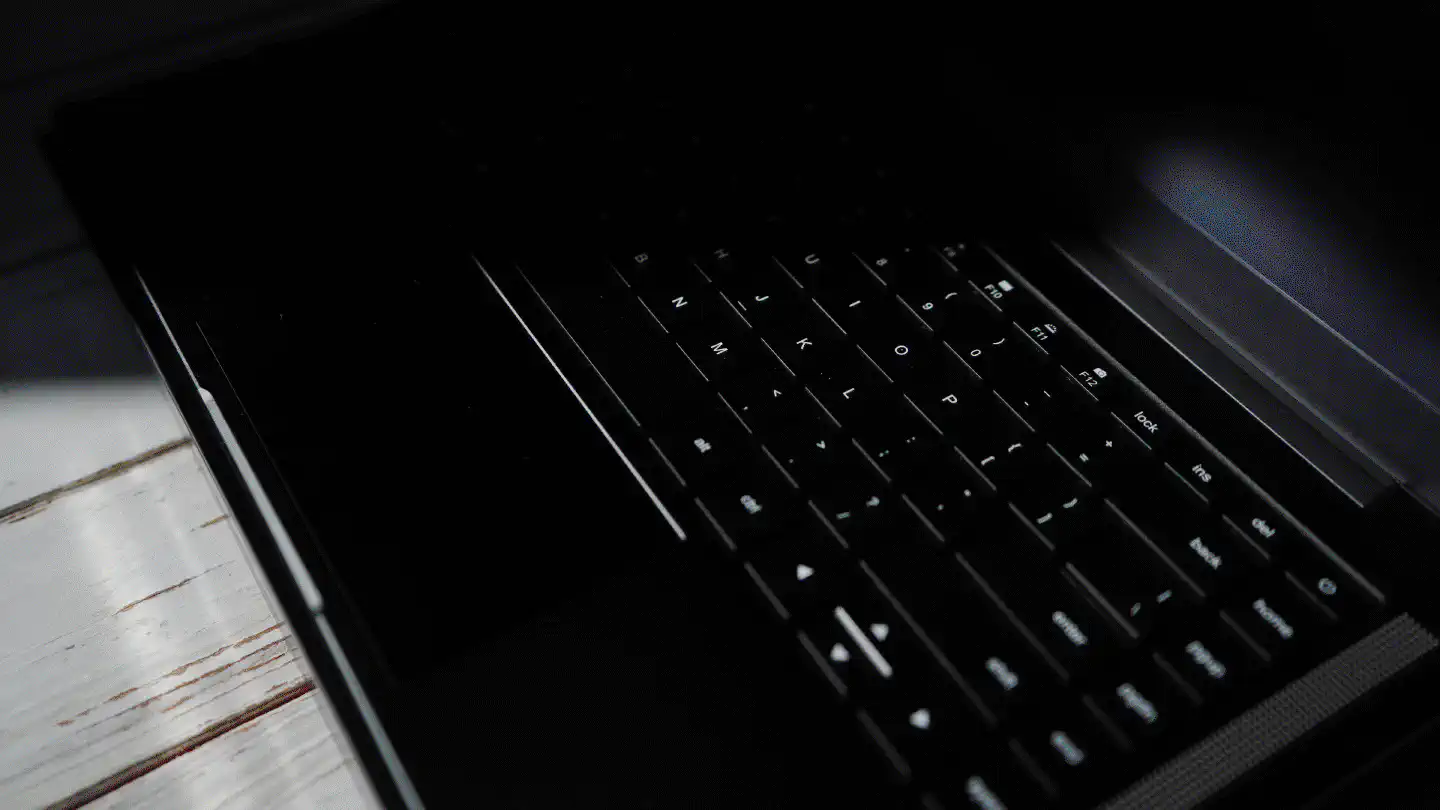

Star Labs StarBook and StarFighter

Pretty much one year ago I made the decision to switch from my main workstation and its sidekick to a more portable, compact and modern laptop: The Star Labs StarBook Mk VI with AMD Ryzen 7 5800U processor.

As mentioned in my brief review of the StarBook, as well as in its hardened Gentoo documentation, the 14" StarBook was supposed to be a temporary solution until a second or third generation of the 16" Star Labs StarFighter would become available one day.

However, little did I know how long it would take Star Labs to make even the first generation of the StarFighter available. While the device was already configurable in their online store when I ordered the StarBook one year ago, it was not yet available to order. Fast forward one year, and the StarFighter is still estimated to dispatch in 3 to 5 months. Pessimistically speaking, the first-generation StarFighter will likely hit the market in mid-2025. At that point, it raises the question of whether the device will still be relevant to anyone, given that its AMD Ryzen 7 7840HS is a Zen 4 processor launched in January 2023. That’s a two-and-a-half-year-old processor by the time the machine will likely be delivered.

Considering this timeline, it’s understandable that even the most hardcore Linux enthusiasts are beginning to doubt Star Labs and are looking for alternatives in the System76, Slimbook, and Framework camps. Especially considering that the promised Coreboot firmware for AMD devices – specifically the StarBook – is still nowhere to be seen, even two years down the road.

While the folks from Star Labs clearly weren’t sleeping for the past two years, as the latest StarBook iteration shows, the release of this Intel-only machine was yet another setback for what is left of the AMD Linux community that still had hopes for Star Labs. The new StarBook is everything the AMD StarBooks should have been: a 14" device with a 625cd/m² 4K display, Coreboot and EDK II, up to 96GB of DDR5 RAM, WiFi 6E, Bluetooth 5.3, and more than one USB-C port (two, to be exact). A device with those specs, but with an up-to-date AMD processor, could easily become a T14-killer and thereby a Linux user’s new favorite machine.

Anyway, with the heavy delays impacting the availability of the StarFighter and presumably its future iterations, I have lost hope of one day upgrading from my StarBook Mk VI to a StarFighter Mk II or Mk III. Instead, I’m beginning to look at machines like the System76 Pangolin, which received a refresh a few weeks ago and now sports an AMD Ryzen 9 8945HS, a 16.1″ 2560×1600 120 Hz display with a matte finish, and up to 96 GB of dual-channel DDR5 memory. In addition, the Pangolin features not one but two M.2 PCIe Gen 4 storage sockets that allow up to 8TB per SSD. And it has two USB-C ports, one of which is USB 4.0.

I do not need an upgrade at this moment, though, and to be fair I’m still happy with the StarBook Mk VI. As a matter of fact, I have come to favor the 14" form factor over the clunkier 15"+ devices that I had in the past. However, more of my workloads now require a somewhat powerful GPU. For example, I have to run llama3.2-vision:11b through Ollama on my workstation rather than on the laptop, because the workstation is 20 times faster than the StarBook, despite not having a compatible GPU. Hence, I concluded that at some point an upgrade will be necessary.

My long-term plan is to have a decent AMD iGPU on the go and pair the setup with an eGPU – connected via USB 4.0 – when stationary. However, even though the laptop’s Zen 3 processor is now three years old, these plans lie in the distant future, as long as the StarBook holds up for another year or two. Nevertheless, I find it sad to see the progress – or the lack thereof – of the StarFighter, as well as the overall Coreboot situation.

EVGA XR1 Pro Capture Card

If you happen to be one of the slightly more than 150 subscribers to my YouTube channel, or if you follow me on Odysee, you might have noticed that I haven’t posted anything in quite some time. As you can imagine, the typing videos, in which I test different boards with different keycaps and switches, are surprisingly high effort for a relatively small outcome. They also require a continuous influx of new keycaps and switches, which goes against the rather minimalist life that I’m striving for.

The more informative videos, like installing OpenBSD or setting up a Wireguard VPN, however, were in comparison a better value for the invested time. Unfortunately, post-production had been a big PITA for me, as I did not have a way to simply turn on my camera and my audio equipment and hit record. I was using a relatively cumbersome workflow consisting of in-camera footage, separate audio tracks recorded in Ardour, and a manual process for merging these two in post, in DaVinci Resolve.

The main reason for having to record in-camera and merge the video with the audio tracks later on is that the Sony A7 does not stream 1080p video through its USB-C webcam feature and instead only outputs 720p. Hence, I was never able to simply use OBS to record decent-quality HD video alongside the high-quality audio from my MOTU and RØDE microphones and only do some color grading in post.

Another big PITA is Ardour, as it still depends on GTK2, which comes with heavy X11 dependencies. Ever since going all-in on Wayland, I have been unable to run Ardour, and I don’t expect things to improve.

I found an external EVGA XR1 Pro USB capture card on sale and decided to give it a go. The EVGA is plug-and-play on any modern Linux system and works flawlessly with OBS. By connecting the Sony A7 via HDMI to the XR1 HDMI input, I’m able to get full HD directly from the camera and feed it into OBS, together with the high-quality audio from the MOTU. In OBS I’m then able to record and even live stream the sessions, making it a lot easier to create such content.

Anyhow, I’m looking forward to giving the camera setup a first try very soon, so stay tuned for new video content!

EMAY EMG-6L

As mentioned in the previous update, I have upgraded from the Garmin Instinct 2X Solar Tactical to the Garmin tactix 7 AMOLED, primarily because of the ECG feature that the tactix brings. Having an ECG on the wrist makes it easy to take a quick reading at any time, which is great. As it is only a single-lead electrocardiogram, however, it does not provide as much detail as a 6-lead or even 12-lead ECG. In addition, it turned out that Garmin intentionally blocks the ECG feature in 80% of the world, requiring some tedious workarounds to get it activated. I was able to do that, yet the situation really dampened my excitement for the tactix 7.

Hence, in addition to the ultra-portable single-lead ECG in my Garmin wearable, I also got a dedicated 6-lead ECG that is relatively portable, yet a little more cumbersome to use and therefore meant only for specific situations.

Even though my cardiologist suggested a Kardia device, I decided to try a cheaper alternative that would not require a monthly subscription or a cloud service to begin with. I stumbled upon the EMAY EMG-6L, which appeared to have relatively decent reviews and would not require any cloud service to retrieve ECG recordings as PDFs. In fact, the device functions just fine without any smartphone or PC attached and only requires connecting an external device via Bluetooth or USB for exporting recordings. This device is a useful addition to my workout equipment.

If you are on the lookout for a portable ECG and don’t feel like spending a significant amount on a smartwatch with integrated ECG functionality, or locking yourself into a subscription-based cloud service, then I can recommend the 6-lead portable ECG from EMAY.

Standard Products USB Cup Warmer

Back in Osaka I picked up a USB Cup Warmer from Standard Products – a Daiso brand – for 550 JPY, roughly 3.50 USD. The device is as simple as it gets, featuring a single button to turn it on and off, as well as a red LED next to it that indicates its state.

According to reviews – yes, apparently even a product like this gets reviewed by some tech magazine these days – the surface gets to about 70°C and works best with stainless steel mugs that have a flat bottom. My measurements, however, indicated temperatures closer to 55°C on the surface, with a porcelain mug half-filled with water on top.

For what it’s worth, the product performs totally fine, regardless of the cup. After placing my cup on the warmer, I usually wait around five minutes for the coffee to cool down to a drinkable temperature. At that point I turn on the warmer to slow the cooling of the coffee enough that each sip is still reasonably warm.

The only downside of the device is the button. While the cup warmer’s red LED is lit when the device is running, it is too small and dim to notice. This has made me forget to turn the device off several times already. I wish the cup warmer had one of those old, rectangular, red coffee machine switches that light up when turned on.

To prevent this from happening in the future, I might get a USB hub that supports per-port power switching and use uhubctl to turn the cup warmer on and off via a keyboard/macropad shortcut and a timeout.
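For the curious, here is a minimal sketch of what such a shortcut could run, assuming a hub that actually supports per-port power switching. It wraps the uhubctl CLI (`-l` selects the hub location, `-p` the port, `-a` the action) and always switches the port off again after a timeout, even if the script is interrupted. The hub location `1-1`, port `2`, the 30-minute timeout and the `warm_cup` helper are hypothetical placeholders, not values from my setup; running uhubctl with no arguments lists the hubs it can control, and it typically needs root or a udev rule to switch power.

```python
import subprocess
import time
from typing import Callable, List

# Hypothetical values: adjust to the hub/port reported by running `uhubctl` without arguments.
HUB_LOCATION = "1-1"
HUB_PORT = 2
WARM_SECONDS = 30 * 60  # assumed auto-off timeout of 30 minutes


def uhubctl_cmd(location: str, port: int, action: str) -> List[str]:
    """Build a uhubctl command that switches a single hub port on or off."""
    if action not in ("on", "off"):
        raise ValueError(f"unsupported action: {action!r}")
    return ["uhubctl", "-l", location, "-p", str(port), "-a", action]


def warm_cup(
    run: Callable = subprocess.run,
    sleep: Callable[[float], None] = time.sleep,
    location: str = HUB_LOCATION,
    port: int = HUB_PORT,
    seconds: float = WARM_SECONDS,
) -> None:
    """Power the cup warmer's port, wait for the timeout, then cut power again."""
    run(uhubctl_cmd(location, port, "on"), check=True)
    try:
        sleep(seconds)
    finally:
        # Runs even on Ctrl+C or an error, so the warmer is never left on.
        run(uhubctl_cmd(location, port, "off"), check=True)
```

Binding a small script that calls `warm_cup()` to a macropad key would cover the "forgot to turn it off" case. The `run` and `sleep` parameters exist only so the logic can be exercised without real hardware.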

TL;DR: 5/5, would recommend.

The elephant in the room

Back in February, my post on why you don’t need a terminal multiplexer on your desktop was posted on Hacker News, and from there it spread across various other platforms. I noticed it when my monitoring triggered an alarm due to Hacker News’ infamous hug of death. However, the site remained online the whole time, which was nice to see given that I had migrated to Bunny CDN only a short while before. The whole hype lasted for four days and consumed around 53 GB of bandwidth.

While the reactions across different platforms appeared to be relatively neutral, with some even being genuinely kind, some commenters on Hacker News seemed to take things personally – so much so that even people without an account apparently went the extra mile of creating one just to be able to comment on the HN thread. That’s some dedication to Tmux!

I, therefore, updated the post to include an apology to those who felt insulted by either the sarcastic tone or the content of the post. Everyone has their workflow that grew on them and that’s totally fine. But the person who’s been using Tmux for the past 20 years as an integral part of their workflow is probably not the person partaking in the influencer-driven trend of Tmuxifying the terminal. This was the main issue that the post intended to criticize: Doing Tmux because of a trend. I wanted to provide an opposing perspective to this trend, pointing out that the top arguments listed in the respective content pieces don’t quite check out and that there are alternatives.

However, one thing that I seem to have failed to provide is an alternative for every single reason why someone might use Tmux, all without turning the post into an even longer piece that most people already found tiring to read through. Commenters would often argue that my post was pointless because it didn’t cover their specific use case.

To be fair, they’re right. I could have added the example of how Tmux can open new panes in the same directory that you’re already in, but so can terminals without Tmux. I could have added another example of how people use tmux-fzf or similar scripts to manage their sessions/workspaces, windows, and panes, and how window managers can do the exact same thing across all windows. Or how zoxide does a lot of the workspace/path magic that people seem to customize Tmux to do, without the overhead that being a terminal multiplexer brings. But would that have changed the perspective of anyone riding the Tmux trend? I’m doubtful.

Long story short, even after reading through many of the comments advocating for Tmux, I still don’t agree with the Tmux bandwagon. On the contrary, I find it boring and uninspiring how topics like these are being parroted instead of showing alternative ways to achieve the functionality that people might be looking for. If you have been using Tmux virtually forever and you’re happy with it, go ahead – you weren’t even the target audience of the post to begin with, and most likely you willingly inserted yourself into it to stir the pot. If you are new to all this and are just following the Tmux cult, please know that Tmux has its disadvantages and consider that there are different ways of doing things that might work better for your specific workflow. Just because everyone and their dog has been using Tmux for decades doesn’t mean there’s no other, possibly better way moving forward.

The most dangerous phrase in the English language is “we have always done it this way.”

– Grace Brewster Hopper

Open source projects

I invested quite some time in pursuing my open source projects in the past quarter, hence there are a few updates to share.

Kopi

At the beginning of March, I published a new tool called Kopi. It is a command-line (CLI) coffee journal (or habit tracker) designed for coffee enthusiasts. It lets you track coffee beans, equipment usage, brewing methods, and individual cups. In case you missed it, you can check the full post here.

Since the initial release it received a few bug fixes and improvements, but it is still in its early stages. I recommend checking it out if you are looking for a coffee journal that allows you to track your coffee preparation and consumption with lots of details, and that even has a mobile app. ;-)

Head over to GitHub if you want to know more.

zeit

zeit received a big chunk of features and fixes thanks to the great work done by Martin.

It’s beautiful to see how over four years after its initial release the tool is still being continuously improved without becoming a feature creature. While many things in the background changed over time and individual commands are now more polished than ever, zeit still features the very same minimalist look and feel that it had when I released it back in 2020.

reader

reader also received some love, with dependency updates and a workaround for an issue concerning the CycleTLS library it uses to request web pages. A new version is available on GitHub, as well as on NetBSD, thanks to pin!

bfstree.zig

As a small exercise in Zig, I implemented a breadth-first search library that I’m planning to use in a Zig CLI that I’m still working on. It’s a small implementation, but it touched on a few things that are interesting for a beginner like myself.
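For anyone unfamiliar with the technique, here is a generic breadth-first search sketched in Python rather than Zig. It is not the bfstree.zig API; the names `bfs_tree` and `path_to` are hypothetical. It explores the graph level by level from a root using a FIFO queue, records each node’s parent the first time it is reached, and that parent map (the BFS tree) yields a shortest path in edge count from the root to any reachable node.

```python
from collections import deque
from typing import Dict, Hashable, Iterable, List, Mapping, Optional


def bfs_tree(
    graph: Mapping[Hashable, Iterable[Hashable]], root: Hashable
) -> Dict[Hashable, Optional[Hashable]]:
    """Return a parent map describing the BFS tree rooted at `root`.

    Assumes nodes are never None, since None marks the root's missing parent.
    """
    parent: Dict[Hashable, Optional[Hashable]] = {root: None}
    queue = deque([root])
    while queue:
        node = queue.popleft()  # FIFO order means nodes are visited level by level
        for neighbor in graph.get(node, ()):
            if neighbor not in parent:  # first discovery is via a shortest path
                parent[neighbor] = node
                queue.append(neighbor)
    return parent


def path_to(
    parent: Dict[Hashable, Optional[Hashable]], target: Hashable
) -> Optional[List[Hashable]]:
    """Walk parent links back from `target` to the root; None if unreachable."""
    if target not in parent:
        return None
    path: List[Hashable] = []
    node: Optional[Hashable] = target
    while node is not None:
        path.append(node)
        node = parent[node]
    path.reverse()
    return path
```

With `graph = {"a": ["b", "c"], "b": ["d"], "c": ["d", "e"], "d": ["f"], "e": ["f"]}`, `path_to(bfs_tree(graph, "a"), "f")` returns `["a", "b", "d", "f"]`, because `d` is first reached via `b`.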

Check it out on GitHub if you’re curious.

Enjoyed this? Support me via Monero, Bitcoin or Ethereum! More info.